Some time ago, Kevin Marks told me about a strange little OS X application that comes with Apple Macs. It is called “Speak After Me”: it takes a bit of text, records the user speaking that text, cuts the recording up (the speech, not the user) into phonemes, or at least what the program calls phonemes, and then maps a pitch contour onto the transcription.

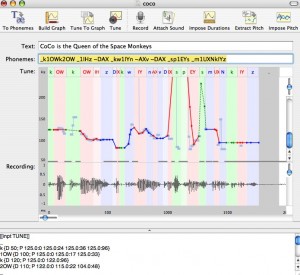

Here is a screenshot of Kevin saying “Coco is the queen of the space monkeys”. Highlighted in yellow is the transcription the program generates, in a very odd phonemic (phonetic?) notation.

Not having a Mac, I don’t know whether the program works for languages other than English. But even in English, its phoneme inventory is clearly a weak point of the system: mapping written language to phonemes simply doesn’t work across all varieties of English. On the other hand, you can apparently edit the combined speech recording, transcription and pitch contour, and the program also lets you export the data as a plain text file. It might be nice to play around with in a class of advanced ESL learners, or even in native-language education at the high school or post-secondary level.

According to Kevin, this is mainly an OS X demo application and part of the “developers’ install”, which is available to all Mac OS X users. Does anyone know more about it?

Eek, that’s an ugly transcription system.

Hello,

Judging from your screenshot, this software would detect various cues in the speech signal by matching an “acoustic pattern” against an acoustic model, stored in the software, of each segment of English. As for regional accents, if the software is well designed, there should be some leeway in the recognition process.

Recognition of these “patterns” relies, for example, on the “pitch curve”, generally called the fundamental frequency curve, which makes it possible to detect the periodic, and therefore voiced, regions of the sound signal (and, conversely, to isolate the voiceless segments).
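The periodicity idea in the comment above can be sketched with a simple autocorrelation test. This is a generic textbook technique for voicing detection, not a description of what the Apple tool actually does internally; the function names, the 60 to 400 Hz pitch range and the 0.5 periodicity threshold are illustrative assumptions. A frame counts as voiced if it correlates strongly with a copy of itself shifted by one plausible pitch period, and that period gives a rough F0 estimate.

```python
import math
import random


def normalized_autocorr(frame, lag):
    """Correlation between the frame and itself shifted by `lag` samples,
    normalized by the energy of the overlapping parts (range -1..1)."""
    a, b = frame[:-lag], frame[lag:]
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return num / den if den > 0 else 0.0


def estimate_voicing(frame, sample_rate, f0_min=60.0, f0_max=400.0, threshold=0.5):
    """Return (True, f0_hz) if the frame looks periodic (voiced), else (False, None).

    The frame must be longer than sample_rate / f0_min samples. Candidate pitch
    periods are scanned from short to long, and the first local peak of the
    autocorrelation that reaches `threshold` is taken as the period.
    """
    min_lag = int(sample_rate / f0_max)   # shortest period considered
    max_lag = int(sample_rate / f0_min)   # longest period considered
    # r[i] is the autocorrelation at lag min_lag + i; the first and last entries
    # only serve as neighbours for the candidates in between.
    r = [normalized_autocorr(frame, lag) for lag in range(min_lag, max_lag + 2)]
    for i in range(1, len(r) - 1):
        if r[i] >= threshold and r[i] >= r[i - 1] and r[i] >= r[i + 1]:
            return True, sample_rate / (min_lag + i)
    return False, None  # no strong periodicity: treat as voiceless


if __name__ == "__main__":
    sr = 16000
    tone = [math.sin(2 * math.pi * 200 * n / sr) for n in range(1024)]  # "voiced"
    rng = random.Random(0)
    noise = [rng.gauss(0.0, 1.0) for _ in range(1024)]                 # "voiceless"
    print(estimate_voicing(tone, sr))
    print(estimate_voicing(noise, sr))
```

With these inputs, the 200 Hz sine is reported as voiced with an F0 of 200 Hz, and the seeded white noise as voiceless. Taking the first qualifying peak rather than the global maximum is a cheap way to avoid octave errors, since a periodic signal also correlates well at two or three times its period.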

The app is called ‘Repeat After Me’, and I must admit I had never heard of it until you mentioned it. It is, as you said, part of the Developer Tools. Here’s what the Repeat After Me User Guide has to say:

Repeat After Me is a tool that is designed to improve the pronunciation of text generated by the Text-To-Speech (TTS) system, by means of editing the pitch and duration of phonemes.

I just don’t get why and when it would be used, or how it works.

I haven’t used the app this way, but here’s my understanding of how and when you would use it, based on Apple’s developer documentation:

Say you’re an application developer who wants your app to speak a certain set of phrases. First you let Mac OS X’s Speech Synthesis framework generate the speech. It will probably do a good job, but what if it doesn’t put the stress or emphasis on the syllables you want? In that case, you start up Repeat After Me, speak the phrase(s) the way you want them spoken, and generate a ‘pitch/duration phoneme representation’ in text form, which your app can then pass to the Speech Synthesis framework to get a more natural pronunciation.
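To make the idea of a text ‘pitch/duration phoneme representation’ more concrete, here is a small Python sketch that turns per-phoneme durations and pitch points into a string in the style of Apple’s TUNE input format. In that format, as assumed here, each phoneme is written as its symbol followed by `{D duration_ms; P pitch_hz:percent ...}`, and the block is wrapped in `[[inpt TUNE]]` and `[[inpt TEXT]]` embedded commands. That layout is based on Apple’s Speech Synthesis Programming Guide and should be checked against it; the `Phoneme` class and the `format_phoneme`/`format_tune` helpers are hypothetical, and the numbers are illustrative rather than taken from a real Repeat After Me export.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Phoneme:
    """One phoneme with its timing and pitch contour (hypothetical structure)."""
    symbol: str         # phoneme symbol, e.g. "AA"
    duration_ms: int    # length of the phoneme in milliseconds
    # Pitch targets as (frequency in Hz, position in percent of the phoneme).
    pitch_points: List[Tuple[float, int]] = field(default_factory=list)


def format_phoneme(p: Phoneme) -> str:
    """Render one phoneme as 'SYMBOL {D ms; P hz:pct hz:pct ...}'."""
    parts = [f"D {p.duration_ms}"]
    if p.pitch_points:
        points = " ".join(f"{hz:g}:{pct}" for hz, pct in p.pitch_points)
        parts.append(f"P {points}")
    return p.symbol + " {" + "; ".join(parts) + "}"


def format_tune(phonemes: List[Phoneme]) -> str:
    """Wrap the phoneme lines in TUNE-mode embedded commands (assumed syntax)."""
    body = "\n".join(format_phoneme(p) for p in phonemes)
    return "[[inpt TUNE]]\n" + body + "\n[[inpt TEXT]]"


if __name__ == "__main__":
    # Illustrative values only, not a real Repeat After Me export.
    phrase = [
        Phoneme("AA", 120, [(176.9, 0), (171.4, 22), (161.7, 61)]),
        Phoneme("r", 70, [(170.7, 0), (169.6, 100)]),
    ]
    print(format_tune(phrase))
```

Run as a script, this prints `[[inpt TUNE]]`, then `AA {D 120; P 176.9:0 171.4:22 161.7:61}` and `r {D 70; P 170.7:0 169.6:100}` on separate lines, then `[[inpt TEXT]]`. The percentages mark where in each phoneme a pitch target falls (0 is the start, 100 the end). In a real app, a string like this would be included in the text handed to the speech synthesizer; that platform-specific call is not shown.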

Is that a little clearer?